Trend Following Primer Series – A Systematic Workflow Process Applied to Data Mining – Part 14

Primer Series Contents

- An Introduction – Part 1

- Care Less about Trend Form and More about the Bias within it – Part 2

- Divergence, Convergence and Noise – Part 3

- Revealing Non-Randomness through the Market Distribution of Returns – Part 4

- Characteristics of Complex Adaptive Markets – Part 5

- The Search for Sustainable Trading Models – Part 6

- The Need for an Enduring Edge – Part 7

- Compounding, Path Dependence and Positive Skew – Part 8

- A Risk Adjusted Approach to Maximise Geometric Returns – Part 9

- Diversification is Never Enough…for Trend Followers – Part 10

- Correlation Between Return Streams – Where all the Wiggling Matters – Part 11

- The Pain Arbitrage of Trend Following – Part 12

- Building a Diversified, Systematic, Trend Following Model – Part 13

- A Systematic Workflow Process Applied to Data Mining – Part 14

- Put Your Helmets On, It’s Time to Go Mining – Part 15

- The Robustness Phase – T’is But a Scratch – Part 16

- There is no Permanence, Only Change – Part 17

- Compiling a Sub Portfolio: A First Glimpse of our Creation – Part 18

- The Court Verdict: A Lesson In Hubris – Part 19

- Conclusion: All Things Come to an End, Even Trends – Part 20

A Systematic Workflow Process Applied to Data Mining

In our previous Primer we outlined how we will be using the Scientific Method as a basis to validate our hypothesis (below) derived from how we interpret a trending ‘liquid’ market to behave.

The Hypothesis

“That we can construct a Diversified Systematic Trend Following Portfolio that targets the tails of the distribution of market returns (both left and right), is adaptive in nature, and through its application across liquid markets can deliver powerful risk adjusted returns over the long term in a sustainable manner.”

We specifically narrowed our hypothesis to only assess:

- a particular market condition (fat tailed trending condition);

- for bi-directional trend following strategies;

- which are applicable to any liquid market (liquid and diversified); and

- that our trend model needs to be adaptive in nature (non-stationary).

Having such a narrow hypothesis helps us significantly reduce our data mining efforts and simplify our experiment, enabling us, subject to validation of our hypothesis, to create sustainable adaptive portfolios.

Data Mining itself can be defined as a process of extracting information from large data sets and then transforming that information into a coherent structure for future use. This is exactly what we want to achieve through the testing of our hypothesis.

We want to mine historical data sets (a diversified array of liquid market data), to evaluate whether or not trades undertaken by adaptive systems designed to capture ‘fat tailed’ trending conditions have been able to generate sustainable returns.

This entire process that we undertake seeks to identify a possible causal relationship between the systems we deploy, which we define as trend following systems (but we could be wrong), and their ability to catch major directional anomalies (which we observe in the real markets).

The ‘proof statement of this hypothesis’ lies in our ability to generate an edge through our diversified portfolio of systems that is sufficient for compounding to take over and do its magic by generating long-term sustainable returns.

If we can demonstrate a ‘causal relationship’, beyond mere random happenstance, between our systems and their ability to extract an enduring edge which is suited to compounding, then we have validated our hypothesis. That is all there is to it.

Avoid the Tendency to Want More – It just ends in Tears

Our hypothesis is so simple, and if we stick strictly to validating it, there are no problems. However, that is not what really happens when we get computer power behind us, given our predisposition towards instant gratification.

We always want more.

We want the ‘best systems’ which boast stunning performance metrics. But answer me this….where in our hypothesis were we requesting the best outcome?

That was an extra layer that your brain instantly added onto this exercise, and with it come associated problems: namely, unrealistic backtests and aggressive optimisation approaches that ‘murder the data’ simply to achieve the best results, not to test the validity of our hypothesis.

But wait…. there’s still more murder on the trading floor. Having optimised and riddled our validation process with selection bias, we suspect, rightly so, that we have murdered the data. So we add further ‘data murdering tools’, like Monte Carlo approaches and Walk Forward techniques, in an attempt to remove the optimisation bias that we have injected into our method. What a mess!

These unnecessary complications might give us what we want on paper, with a beautiful back-tested equity curve reaching for the heavens, but they are certainly not what the Market intends to deliver to us when we take these creations to the live environment. The Market just wants to give us a very painful lesson in how very ‘wrong’ we are.

Through this added layer of complexity that we have unnecessarily ‘forced into our thesis’, we now find ourselves far removed from the original hypothesis that we wanted to validate.

We have explicitly stated in earlier Primers that we are only seeking a ‘weak edge’ as that is all that is needed for compounding to then take over and do the heavy lifting. Other more impressive quantitative scientists have already done the hard yards in the academic literature and have already demonstrated that a weak edge exists through their market studies.

But now, given our ‘apelike tendencies where we want the banana’, we turn our backs on these empirical studies out of a desire to obtain the ‘strongest edge’? Something smells like a “Charlatan’s trap” here.

Hopefully you can now see the rut we fall into when we steer away from our primary objective by letting our desires run rampant.

Avoid the tendency to want more than what the markets are prepared to deliver. It just ends in tears.

We are Seeking Proof of a Causal Relationship, Not a Statement about Future Expectancy

All we want to do is determine that there is a strong likelihood of a causal relationship between the trend following systems we use (honed from our philosophy about how the markets behave) and their ability to capture trending conditions, and that this causal relationship can be inferred from our portfolio’s long term performance record.

If so, then this correlated performance relationship between our systems/portfolio and historical market data has a better chance (than a random relationship) of enduring into an uncertain future. We can then be sufficiently confident in deploying our portfolios into an uncertain future, where we suspect ‘fat tailed conditions’ MAY arise.

Future performance is primarily dictated by the Market and NOT our systems/portfolios. Our systems can of course turn this into a nightmare, but optimal performance is capped by the market, not by an optimisation.

The best we can do is develop systems that respond to the Market’s beck and call….and this relationship of causality between our systems and the market is not stationary and can vary over time.

The systems we deploy must be able to adapt to the dynamic relationship that exists between our systems and the market. Complex markets are constantly changing and adapting. So should our systems. The market trends that we have targeted over the historical record have changed. As a result, our workflow process must incorporate an adaptive element into its procedural architecture.

So let’s get our helmets on and get back to work. Down t’ut pit we go.

It’s Off to Work we Go….with a Workflow

A workflow process can be defined as a sequence of tasks that process a set of data. It typically comprises a sequence of systematic processes that can be repeated and is arranged in a procedural step by step manner to process inputs into outputs, or raw data into processed data.

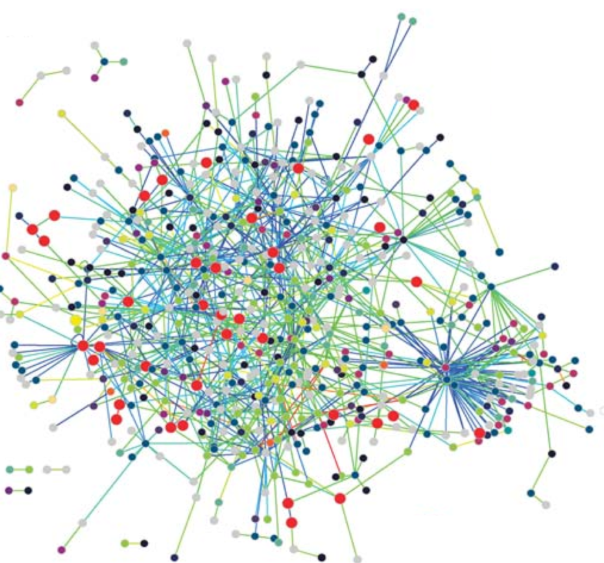

Figure 1 describes a workflow that we use at ATS to validate our trend following models. Our process turns “in sample raw data into robust trend following portfolios with an edge”. The workflow sits snugly within our broader scientific method. Having passed through the workflow, we have a completed portfolio which we can then test over very long-range ‘unseen’ market data (referred to as Out of Sample data, or OOS).
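
As a minimal sketch of the in-sample versus OOS separation, the snippet below splits a list of dated bars at a cutoff date. The function name, the sample prices and the choice to hold out the later period are all assumptions made for illustration; which stretch of history is held back as ‘unseen’ is a design decision that this sketch does not settle.

```python
from datetime import date


def split_in_sample_oos(bars, cutoff):
    """Split (date, close) bars into in-sample rows (before cutoff) and held-out rows."""
    in_sample = [bar for bar in bars if bar[0] < cutoff]
    out_of_sample = [bar for bar in bars if bar[0] >= cutoff]
    return in_sample, out_of_sample


# Hypothetical daily closes, for illustration only.
bars = [
    (date(2019, 12, 30), 100.0),
    (date(2019, 12, 31), 101.5),
    (date(2020, 1, 2), 102.0),
    (date(2020, 1, 3), 101.0),
]

in_sample, out_of_sample = split_in_sample_oos(bars, cutoff=date(2020, 1, 1))
```

The point of the split is simply that the workflow only ever touches `in_sample`, while `out_of_sample` stays untouched until a completed portfolio exists.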

We try to keep our processes very simple.

I often have a giggle when trying to understand the quantitative mindset. They are very tough minds to understand as there is chaos in their process. While there is a general consensus out there in quant world that it is best to keep systems simple, as this simplicity allows for robustness, the discussion then turns more ‘arcane’, moving on to degrees of freedom, and before you know it the discussion heads into the most complex and devious statistical add-ons, which complicate the once simpler process.

In this confusing discussion about advanced statistical concepts and techniques, we are left scratching our heads in a quagmire of complex processes where huge gaps exist in the coherent train of thought which is meant to reside within our factory processes.

This is where a workflow comes to the rescue. It forces us to explicitly declare the processes we will be undertaking to test our hypothesis in a coherent logical manner: Step 1 leads to Step 2, which leads to Step 3, on a logical procedural basis. If there are any queer complex steps in our process, we will see them in our workflow. There just won’t be a coherent flow.

A workflow is much like a steel producer’s description of the processes that take iron ore and turn it into steel.

So if we look at Figure 1, we can see a diagrammatic representation of a workflow process we use at ATS.

You will see that it comprises 5 procedural steps that process the data to produce trend following portfolios. The processes include methods to develop and test for:

- system design and development;

- system robustness;

- recency (adaptability);

- sub portfolio (market) long term historical performance; and

- portfolio long term historical performance.

Figure 1: Diagrammatic Representation of Testing Process Including Workflow

We will be using such a sequence of steps (a workflow) to “let the data speak for itself”, so that the data, with minimal discretionary input from us, plays the dominant role in portfolio development.
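
To make the idea of a repeatable sequence concrete, here is a rough sketch of a workflow runner in which each step takes the output of the previous one. The five step names mirror the list above, but the runner, the placeholder steps and the dictionary keys are hypothetical stand-ins, not the ATS implementation.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Dict], Dict]]


def run_workflow(steps: List[Step], state: Dict) -> Dict:
    """Run each named step in order, feeding the output of one into the next."""
    steps_run = []
    for name, step in steps:
        state = step(state)
        steps_run.append(name)
    state["steps_run"] = steps_run
    return state


def make_placeholder(output_key: str) -> Callable[[Dict], Dict]:
    """Build a dummy step that only records that its output was produced."""
    def step(state: Dict) -> Dict:
        new_state = dict(state)
        new_state[output_key] = f"<{output_key} produced here>"
        return new_state
    return step


# Placeholder steps: real ones would hold the design, robustness and portfolio logic.
WORKFLOW: List[Step] = [
    ("system design and development", make_placeholder("candidate_systems")),
    ("system robustness", make_placeholder("robust_systems")),
    ("recency (adaptability)", make_placeholder("adaptive_systems")),
    ("sub portfolio long term performance", make_placeholder("sub_portfolios")),
    ("portfolio long term performance", make_placeholder("portfolio")),
]

result = run_workflow(WORKFLOW, {"raw_data": "in-sample market data"})
```

Because the order lives in one list, adding, editing or removing a step later is a one-line change, and any step that does not fit the flow is easy to spot.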

Being able to repeat the procedures undertaken in our data mining exercise is an essential pre-requisite so that we can do it again if it is necessary and allow others to apply the same processes to validate the model. Furthermore, repeatability allows for the addition, editing or removal of steps at a later date to expand, amend or adjust the experimental process if required.

To support our strong preference for systematic and repeatable processes, we use algorithms themselves to undertake the sequential processes of data mining. Each step in the process is assigned to an algorithm that is responsible for undertaking the operation, but we might need a human brain along the way to define the scope within which each algorithm can operate: for example, the scope and range of the market universe we test across, the range of values we test for each strategy parameter, the degree of processing to be undertaken and any threshold values assigned to processes.
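
One way to picture that human-defined scope is as a small, explicit settings object that the algorithms then work within. The sketch below is an assumption-laden illustration: the class name, the market labels, the parameter names (`lookback`, `stop_atr`) and the `min_trades` threshold are all invented for the example.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, Iterator, List, Tuple


@dataclass(frozen=True)
class MiningScope:
    """Human-defined limits within which the mining algorithms operate (hypothetical)."""
    markets: Tuple[str, ...]            # market universe to test across
    parameter_ranges: Dict[str, List]   # values to test for each strategy parameter
    min_trades: int                     # example threshold value assigned to a process


def parameter_grid(scope: MiningScope) -> Iterator[Dict]:
    """Yield every combination of parameter values inside the declared scope."""
    names = sorted(scope.parameter_ranges)
    for values in product(*(scope.parameter_ranges[name] for name in names)):
        yield dict(zip(names, values))


scope = MiningScope(
    markets=("MARKET_A", "MARKET_B"),
    parameter_ranges={"lookback": [50, 100, 200], "stop_atr": [2.0, 4.0]},
    min_trades=30,
)

grid = list(parameter_grid(scope))  # 3 lookbacks x 2 stops = 6 combinations
```

Declaring the scope up front means the amount of mining is fixed before any results are seen, which keeps discretionary input to a minimum.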

A workflow process can therefore be likened to an ‘experimental process’ used by a scientist to test a model and it must be documented so that it can be peer reviewed, replicated, amended and possibly improved.

Fortunately, when using a systematic workflow method, the documentation of the experiment is quite a straightforward matter. Data files used for processing, settings applied to each algorithm, the code of execution for each algorithm and process reports and outputs are all saved and filed away for future reference.
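
As a rough sketch of what ‘saved and filed away’ could look like, the snippet below writes a small manifest for one run, recording a hash of each data file alongside the settings and output names. The file layout, the `manifest.json` name and the example values are assumptions for illustration only.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_sha256(path):
    """Fingerprint a data file so a later rerun can confirm it used the same data."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def write_manifest(run_dir, data_files, settings, outputs):
    """Save the data fingerprints, algorithm settings and output names for one run."""
    run_dir = Path(run_dir)
    run_dir.mkdir(parents=True, exist_ok=True)
    manifest = {
        "data_files": {str(p): file_sha256(p) for p in data_files},
        "settings": settings,
        "outputs": outputs,
    }
    manifest_path = run_dir / "manifest.json"
    manifest_path.write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest_path


# Demonstration in a temporary folder with a made-up data file.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "market_a.csv"
    data_file.write_text("date,close\n2020-01-02,102.0\n")
    manifest_path = write_manifest(
        Path(tmp) / "run_001",
        data_files=[data_file],
        settings={"lookback": 100},
        outputs={"report": "robustness_report.txt"},
    )
    recorded = json.loads(manifest_path.read_text())
```

With a record like this per run, anyone repeating the experiment can check that they started from the same data and the same settings.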

Depending on your coding skills, you can either develop your own workflow algorithms or, if coding skills are absent, use 3rd party data mining software for these purposes. However, it is far preferable to use your own ‘inhouse coding method’, as the workflow process can then be specifically tailored to your hypothesis. As good as some of the 3rd party software is, there are always deficiencies for which you then need to develop workarounds.

None of this precludes undertaking these processes manually, without algorithms, but given the data crunching that needs to be undertaken through a rigorous workflow process, using algorithms under PC power vastly accelerates the process. Data mining across extensive data sets requires intensive computer power (both in terms of processing speed and memory). It is strongly recommended that you choose the systematic workflow path.

Those Dreaded Words……Back-testing and Curve Fitting

Now you will notice that, so far in our discussion, the term backtest has been noticeably absent. This is because of the bad rap that backtests have earned through their frequent erroneous application, which is riddled with bias.

Backtesting is NOT a process undertaken to predict future returns. Rather, a backtest is simply an empirical process used to validate our hypothesis using actual data (or more explicitly, what is known).

It is useful for testing conclusions about a system’s robustness or adaptability over historical data, but that is where it ends. We do use backtests extensively as a basis to evaluate our trend following models for their robustness and adaptability using historic data, but we never use them as a basis of future expectation.

Backtesting is just a small part of a deeper process of system design. A system needs to be designed first that is configured to target a desired market condition (such as a trending condition) using logical design principles that embed causation into the derivative relationship between the system and that market condition. A backtest is then used on ‘unseen data’ to evaluate the strength of this causal relationship and whether your system does its desired job.

If markets don’t trend, then your system doesn’t perform. If markets do trend, then your system performs. There is an explicit connection between system and trending condition developed through system design principles.
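
A toy sketch can make that connection visible. The rule below is a deliberately crude momentum rule (long if price is above its level a few bars ago, short if below), invented for illustration and not an ATS system, applied to three made-up price series: a steady uptrend, a steady downtrend and a zigzag that goes nowhere.

```python
def momentum_pnl(prices, lookback):
    """PnL in price points of a toy rule: long if price is above its level
    `lookback` bars ago, short if below, flat if unchanged."""
    pnl = 0.0
    for t in range(lookback, len(prices) - 1):
        change = prices[t] - prices[t - lookback]
        position = (change > 0) - (change < 0)  # +1, -1 or 0
        pnl += position * (prices[t + 1] - prices[t])
    return pnl


# Synthetic series, for illustration only.
uptrend = [100.0 + t for t in range(50)]
downtrend = [100.0 - t for t in range(50)]
zigzag = [100.0 + (1.0 if t % 2 else 0.0) for t in range(50)]

uptrend_pnl = momentum_pnl(uptrend, lookback=3)
downtrend_pnl = momentum_pnl(downtrend, lookback=3)
zigzag_pnl = momentum_pnl(zigzag, lookback=3)
```

The rule profits on both trending series, long in one and short in the other, and loses on the zigzag. The result follows from the design of the rule, which is the kind of explicit link between system and market condition described above.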

Curve fitting (also known as data snooping) arises when a trader observes the data first and only then develops their system. The system is configured to a data set that may simply be a random perturbation. The trader then undertakes a backtest and, lo and behold, the system performs admirably, but there is no causal relationship between the system and a ‘real market feature of enduring substance’. The system therefore falls over with ‘unseen data’.

The problem with an approach such as this is that no logical reasoning establishes a causal relationship between the design of the system being evaluated under a backtest and the observed pattern of behaviour. In the absence of any causal relationship, we have no hope of receiving any benefit from future unseen data.
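
The mechanics can be sketched with pure noise. In the hypothetical experiment below, 200 ‘systems’ are nothing more than random long/short positions, the ‘market’ is random returns, and we keep whichever system scored best on the data we looked at. All names, sizes and the seed are assumptions chosen for the illustration.

```python
import random


def pnl(positions, returns):
    """Total PnL of holding the given position on each day's return."""
    return sum(p * r for p, r in zip(positions, returns))


rng = random.Random(42)  # fixed seed so the run is repeatable
n_days, n_systems = 250, 200

# A "market" of pure noise: there is no real feature here to capture.
observed = [rng.gauss(0.0, 1.0) for _ in range(n_days)]
unseen = [rng.gauss(0.0, 1.0) for _ in range(n_days)]

# Each "system" is a random string of long/short positions with no design logic.
systems = [[rng.choice((-1, 1)) for _ in range(n_days)] for _ in range(n_systems)]

observed_scores = [pnl(s, observed) for s in systems]
best = max(range(n_systems), key=lambda i: observed_scores[i])

best_observed = observed_scores[best]
average_observed = sum(observed_scores) / n_systems
best_unseen = pnl(systems[best], unseen)
```

By construction `best_observed` sits at the top of the pack, so the selected system looks impressive on the data it was chosen from. Its positions have no connection to the unseen returns, so `best_unseen` is just another draw with an expected value of zero.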

We will explore ‘curve fitting’ in a future Primer, as it is essential that we know how to identify it so we can avoid it like the plague.

In our next Primer we will get into the first step of the Workflow process, namely the System Design Phase. This is where we don our creative trend following hats and devise systems that obey the Golden Rules of Trend Following before we commence assessing their merit using market data.

So stay tuned to this series.

Trade well and prosper

The ATS mob
